Weighted Q-caches in Temporal Attention

While I was searching for methods to improve visual consistency, two papers jumped out at me:

- ConsiStory: Training-Free Consistent Text-to-Image Generation

- Cross-Image Attention for Zero-Shot Appearance Transfer

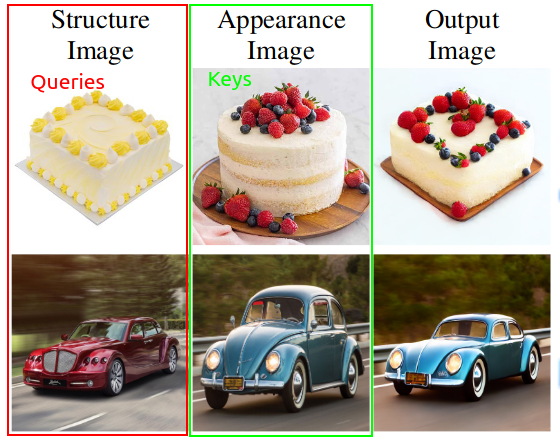

Research indicates that in denoising 3D U-Nets with attention mechanisms, the queries carry structural information.

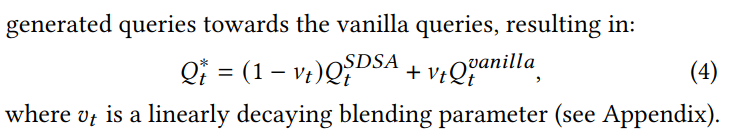

In ConsiStory, they use vanilla query features to improve the diversity of the generated image while maintaining the structure. They introduce blending of encoder and decoder queries in their Subject Driven Self-Attention modules.

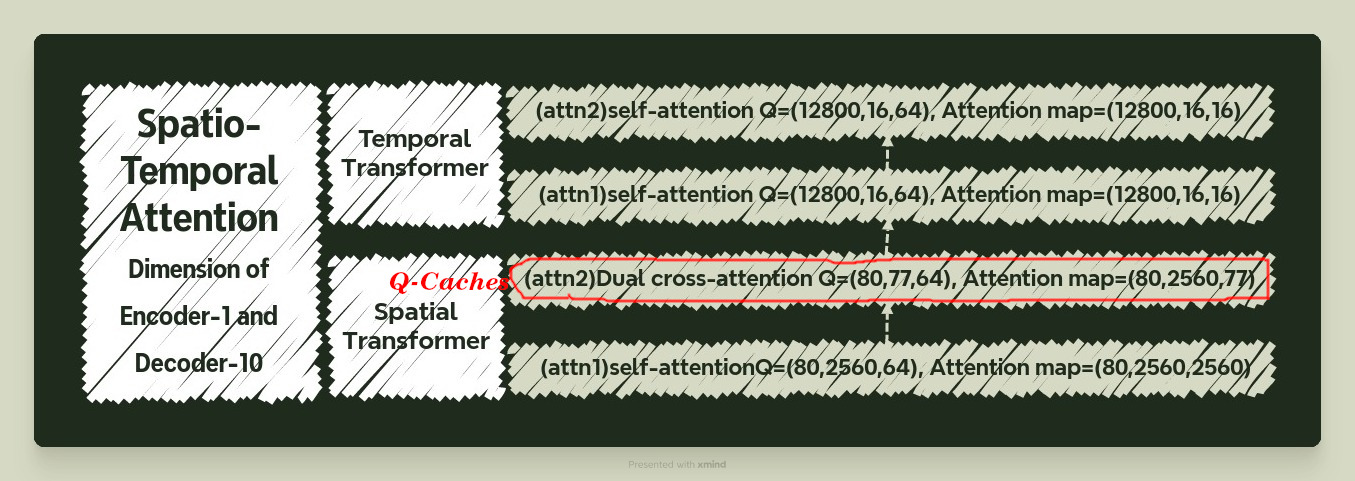

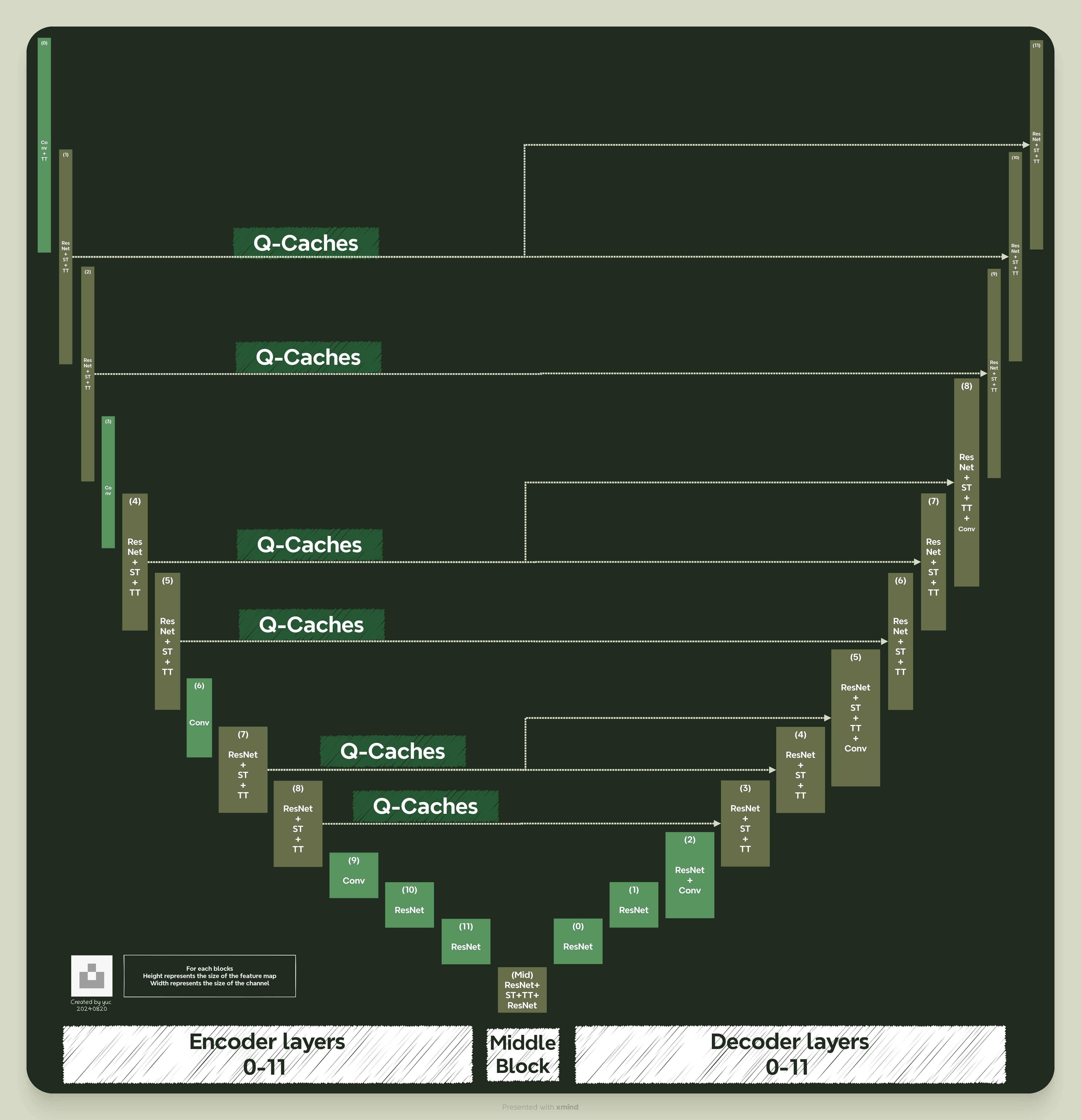

Inspired by these insights, I went on to identify actionable codes in VideoCrafter’s 3D U-Net. The first challenge I noticed is that “not all layers are transformers.” As shown below, the blocks marked in olive green are transformers (ST-attention). This makes it impossible to map queries directly from each encoder layer to the decoders.

Since direct mapping wasn’t possible, I took a manual approach. I selected corresponding queries from the encoder to map to the decoder, ensuring they have the same dimensions for weighted addition. Nothing too complex — just making sure the pieces fit together perfectly.

\[Q^* = (1-v_t)Q_{\text{decoder}} + v_t \underbrace{Q_{\text{cache}}}_{=Q_{\text{encoder}}}\]I tried experimenting with different values for \(v_t\) to see if I could improve the results and started following the paper with linearly decreased \(v_t=[0.9, 0.8]\), but they didn’t make a big difference. So, I stuck with a fixed value of 0.9. This means that 90% of the \(Q_{\text{decoder}}\) are replaced by the one from the encoder.

Since I aimed to enhance temporal visual consistency, I targeted the self-attention modules in the temporal transformer for applying Q-caches. Here’s the breakdown:

- Shape of Q = (batch, frames, vector length) = (12800, 16, 64)

- Shape of Self-attention map \(QK^T\): (12800, 16, 16)

In simpler terms, each “pixel” on the feature map (40x64) gets processed by 5 heads, which results in 12800 batches. The self-attention mechanism focuses solely on frame-to-frame relationships, with each frame represented by a vector of length 64.

By applying Q-caches to the temporal transformer, I hope to consistently improve the “frame-to-frame structure” as the video progresses. While “structure” is a bit abstract, consider it the underlying pattern that might hold the footage together.

Results

| FIFO-Diffusion | + Q-Caches |

|---|---|

| "a bicycle accelerating to gain speed, high quality, 4K resolution." | |

|

|

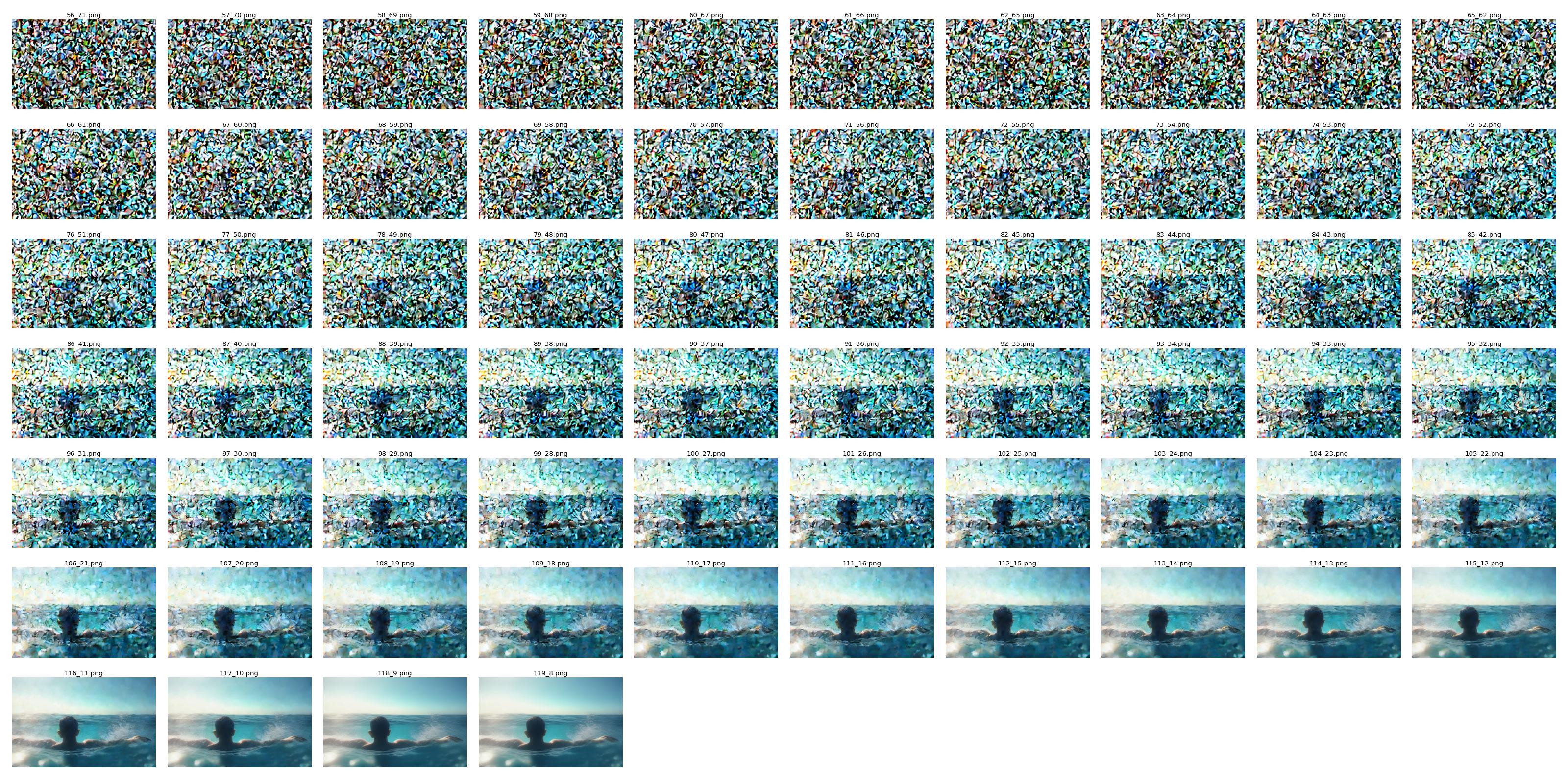

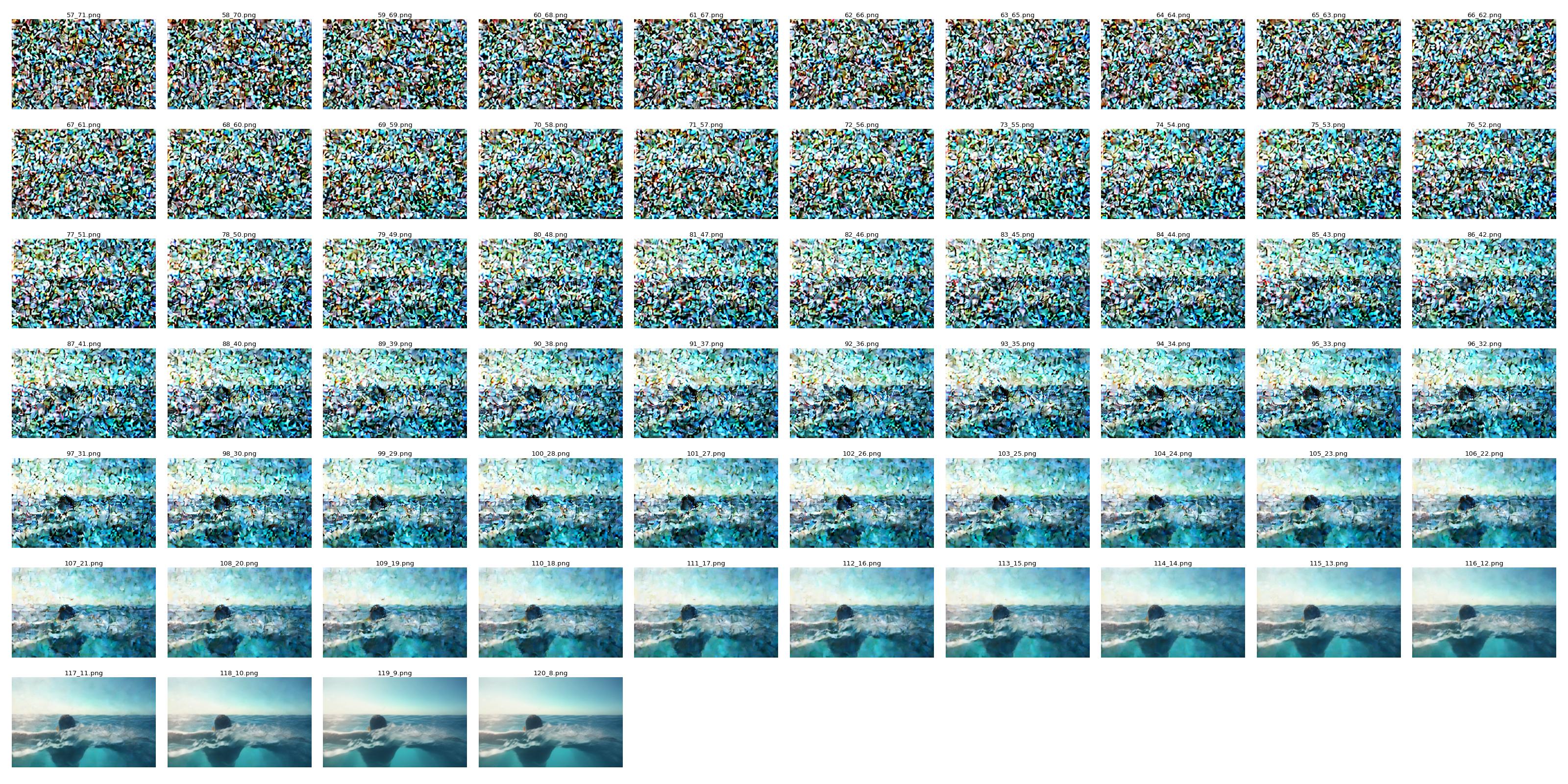

| FIFO-Diffusion | + Q-Caches |

|---|---|

| "a person swimming in ocean, high quality, 4K resolution." | |

|

|

| Improved Segment (Frame 85-95) | |

|

|

| FIFO-Diffusion | + Q-Caches |

|---|---|

| "a car slowing down to stop, high quality, 4K resolution." | |

|

|

| Improved Segment (Frame 73-80) | |

|

|

| FIFO-Diffusion | + Q-Caches |

|---|---|

| "a person giving a presentation to a room full of colleagues, high quality, 4K resolution." | |

|

|

| Improved Segment (Frame 100-110) | |

|

|

Take the “a person swimming in the ocean” video as an example — there’s still some inconsistency between frames 119 and 134. In this part of the video, the person’s back flips unnaturally when the camera dives below the ocean surface.

| Inconsistancy around frame 119-134 (FIFO+Q-Caches) |

|---|

|

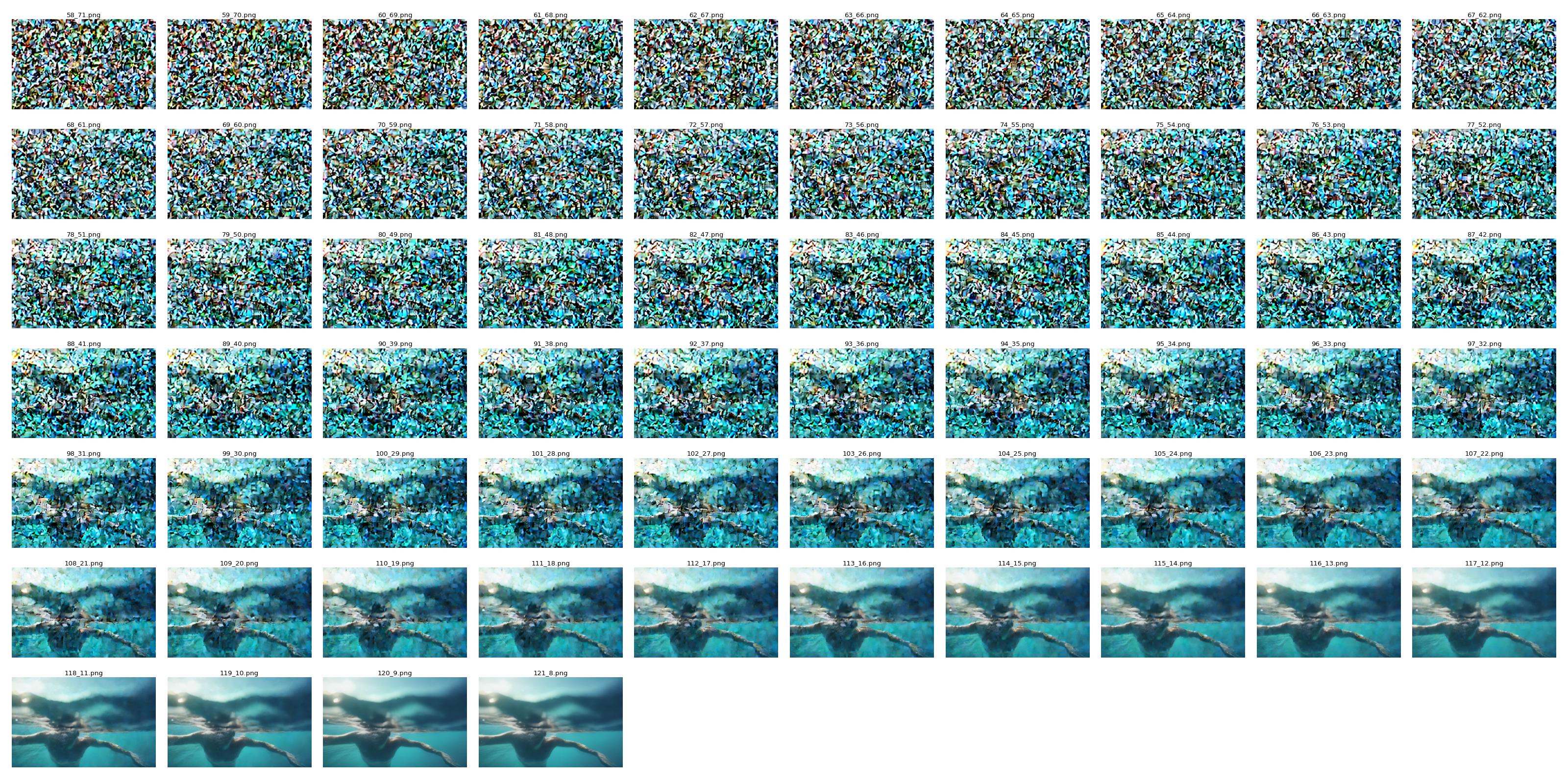

I looked closely at the denoising process for some problematic frames (119, 120, 121). In the figure below, the top-left item represents noise generated from a normal distribution \(z \sim \mathcal{N}(0, 1)\) (code).

| Denoising step by step of the Frame 119 | |

|---|---|

|

|

| Denoising step by step of the Frame 120 | |

|

|

| Denoising step by step of the Frame 121 | |

|

Since I’m using FIFO-Diffusion to make long videos, we’re working with a technique called “diagonal denoising.” It’s a bit complicated, so let’s dive deeper into it in my next post — Extending the Latent Uniformly.

Comments