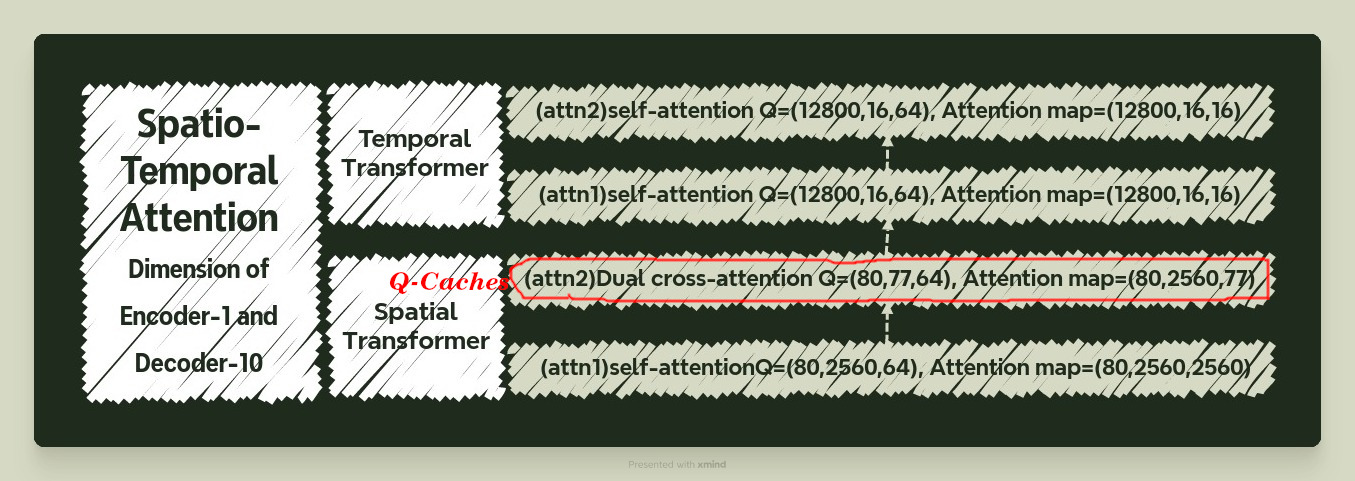

Anywhere Q-caches

Remember when we tried Q-caches in the Temporal Transformer and it was a success?

Well, I got some pretty magical results in my experiments when I applied Q-caches to the Spatial Transformer’s second attention module.

Q-caches at the Spatial Transformer’s 2nd attention module

