Seeding the initial generated frame as the image embedding

The original FIFO-Diffusion supports only text-to-video generation. Out of curiosity, I extended it to handle image-to-video as well, adapting the approach from VideoCrafter. Below are some results using input images and text prompts from VBench:

| Input Image | VideoCrafter | Extended by FIFO-Diffusion |
|---|---|---|
| "a city street with cars driving in the rain, high quality, 4K resolution." | | |
| "an older woman sitting in front of an old building, high quality, 4K resolution." | | |

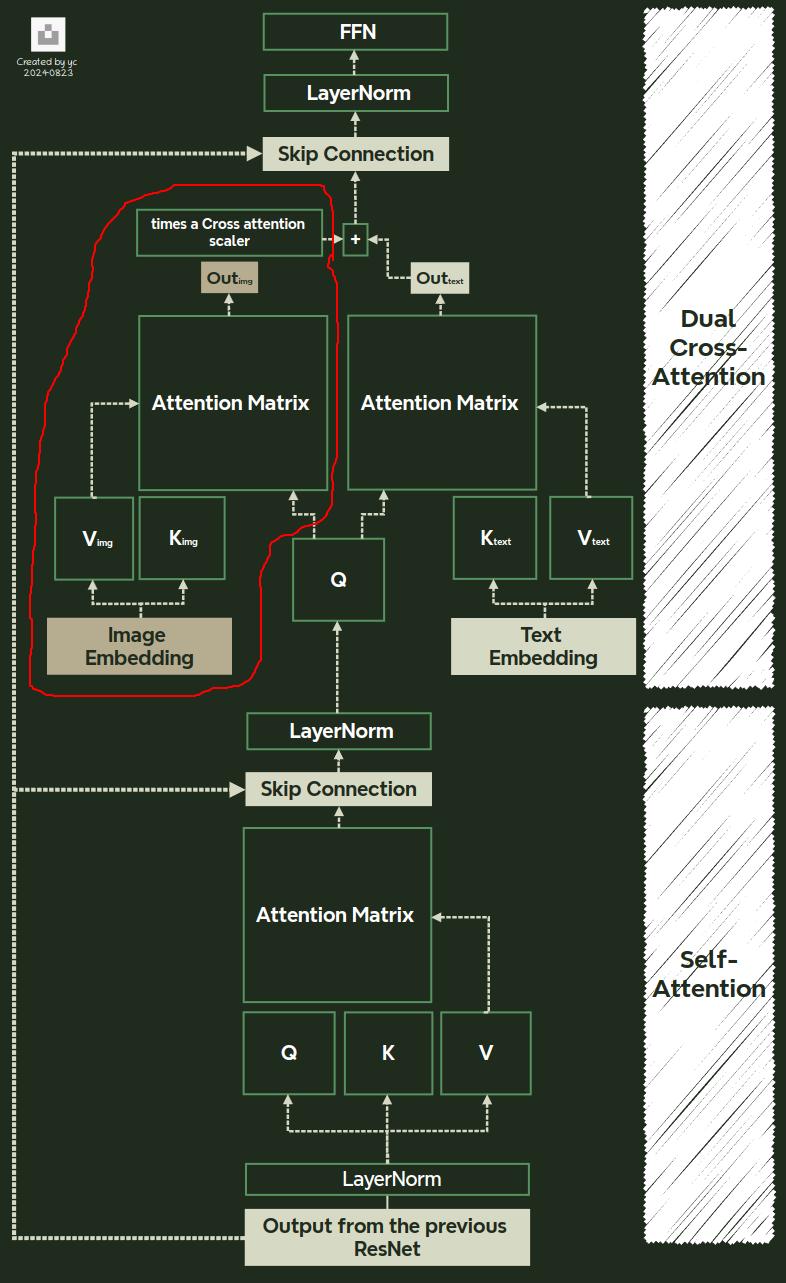

The main difference between text-to-video (T2V) and image-to-video (I2V) generation lies in the spatio-temporal attention mechanisms. If you’re curious, check out my previous posts for more details. In short, it’s all about the red-marked area in cross-attention, where the image embedding interacts with the latents and the text at every layer of the denoising process.
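To make that interaction concrete, here is a toy, pure-Python sketch of one latent query attending over a context of text tokens, with and without image tokens appended. It is not VideoCrafter's implementation: the 2-D vectors are made-up values, the context tokens act as both keys and values (no learned projections), and real models may route image tokens through a separate attention branch.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attention(query, context):
    """Single-head attention of one query vector over a list of context tokens.

    Simplification (assumption): each context token is used as both key and
    value, which is enough to show the data flow.
    """
    scale = 1.0 / math.sqrt(len(query))
    scores = [scale * sum(q * k for q, k in zip(query, token)) for token in context]
    weights = softmax(scores)
    output = [
        sum(w * token[i] for w, token in zip(weights, context))
        for i in range(len(query))
    ]
    return output, weights

# Toy 2-D embeddings (hypothetical values, purely illustrative).
latent_query = [1.0, 0.0]
text_tokens = [[0.0, 1.0], [0.0, 2.0]]
image_tokens = [[3.0, 0.0]]

# T2V: the latent attends to text tokens only.
t2v_out, t2v_weights = cross_attention(latent_query, text_tokens)

# I2V: image tokens are appended to the context, so the same query can attend to them too.
i2v_out, i2v_weights = cross_attention(latent_query, text_tokens + image_tokens)
```

In this toy setup the image token lines up with the query, so it takes most of the attention weight and pulls the output toward the image content. That is the intuition behind the image embedding guiding every denoising step.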

The conditional image embedding strongly guides the diffusion model toward frames that stay true to the input image. This got me thinking: if we had an image embedding in the text-to-video pipeline, could it make the generated video more visually consistent?

Luckily, FIFO-Diffusion is built on an existing fixed-length video generator, so its first generated frame can serve as the initial image!

Pseudo code:

```python
import torch

def concat_text_img_emb(model, frame1, text_embedding):
    # frame1 is the first frame in latent space (from the pre-trained T2V model).
    # Shape of frame1 = (4, 40, 64): 4 latent channels and a (40, 64) feature map.

    # Decode the latent frame to obtain the image tensor.
    # frame_tensor has shape (3, 320, 512): 3 RGB channels at 320x512 pixels.
    frame_tensor = model.decode_first_stage_2DAE(frame1)

    # Convert the decoded image to an image embedding.
    first_ref_img_emb = model.get_image_embeds(frame_tensor)

    # Concatenate the text and image embeddings along the token (sequence) axis,
    # i.e. the second-to-last axis, so the feature dimension stays unchanged.
    context_embedding = [torch.cat([text_embedding, first_ref_img_emb], dim=-2)]
    return context_embedding
```
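The shape bookkeeping in the pseudo code can be checked with a small pure-Python sketch. The upsampling factor of 8 is inferred from the shapes quoted in the comments (40 to 320, 64 to 512). The token counts and feature width passed to `concat_token_shape` are hypothetical values chosen only for illustration, and both helper names are mine.

```python
def decoded_shape(latent_shape, upsample=8, rgb_channels=3):
    """Pixel-space shape of a decoded latent frame of shape (C, H, W).

    upsample=8 is an assumption inferred from the shapes quoted above.
    """
    _, height, width = latent_shape
    return (rgb_channels, height * upsample, width * upsample)

def concat_token_shape(text_shape, image_shape):
    """Shape after joining two (tokens, dim) embeddings along the token axis."""
    text_tokens, text_dim = text_shape
    image_tokens, image_dim = image_shape
    if text_dim != image_dim:
        raise ValueError("text and image embeddings must share the feature dimension")
    return (text_tokens + image_tokens, text_dim)

# Latent frame (4, 40, 64) decodes to an RGB image of 320x512 pixels.
frame_shape = decoded_shape((4, 40, 64))

# Hypothetical sizes: 77 text tokens and 16 image tokens, both 1024 wide.
context_shape = concat_token_shape((77, 1024), (16, 1024))
```

The point of the second helper is that concatenation grows the number of context tokens while the feature width stays fixed, which is what lets the cross-attention layers consume text and image tokens together.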

Experiment results:

| FIFO-Diffusion | Seeding the initial latent frame |
|---|---|
| "a person swimming in ocean, high quality, 4K resolution." | |
| "a train crossing over a tall bridge, high quality, 4K resolution." | |
| "a car slowing down to stop, high quality, 4K resolution." | |
